"Show me how to implement the resource access protocol PIP on Linux in C!"

This article answers that request.

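Credibility of this article

- 12 years of research experience in real-time systems.

- Taught an OS (Linux kernel) course in English while a faculty member at the University of Tokyo.

- Visiting scholar at the Department of Computer Science, University of North Carolina at Chapel Hill (UNC), USA, from September 2012 to August 2013. Research and development of real-time Linux in C.

- More than 15 years of programming experience. Languages: C/C++, Python, Solidity/Vyper, Java, Ruby, Go, Rust, D, HTML/CSS/JS/PHP, MATLAB, Assembler (x64, ARM).

- While a faculty member at the University of Tokyo, released two projects as open source on GitHub: an "LLVM compiler extension" developed in C++ and "Mcube Kernel", an original real-time OS developed in C.

- Since January 2020, CTO of Guarantee Happiness LLC in Chapel Hill, North Carolina, USA, working on e-commerce site development and web/social media marketing. Since June 2022, CEO and CTO of Japanese Tar Heel, Inc., also in Chapel Hill, North Carolina.

- Recently engaged in publishing useful information about natural language processing AI and Ethereum.

- Author of more than 50 articles, including Japanese translations of papers on natural language processing AI (and AI in general) and articles on AI chatbots (ChatGPT, Auto-GPT, Gemini (formerly Bard), and others). Contract experience as a prompt engineer, manager, and Quality Assurance (QA) for a company in San Francisco, USA (Silicon Valley in the broad sense).

- Author of more than 200 articles on Ethereum and cryptocurrencies in general (including smart contract programming). Contract experience translating English cryptocurrency articles into Japanese for a company in London, UK.

You can learn from an author with this background.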

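Learning C on your own is difficult.

If you would like a free consultation with me about C, please add the official LINE account "ChishiroのC言語" (Chishiro's C Language) as a friend.

My capacity is limited and I will close registration once a certain number is reached, so add it now!

If self-study is hard for you, find a school that suits you among the five online programming schools for learning C recommended by a former University of Tokyo faculty member. You will not regret it!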

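[C Language] Implementing the Resource Access Protocol PIP on Linux

This article shows how to implement the Priority Inheritance Protocol (PIP), a resource access protocol, on Linux in C.

It assumes that you understand the following article.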

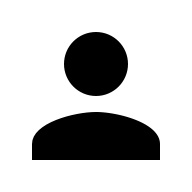

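The task set used in this article consists of three tasks, which behave as follows.

Task 1 and Task 3 share the same critical section.

- Task 1: sleep for 2 seconds, execute for 1 second, enter the critical section, execute for 1 second, leave the critical section, execute for 1 second

- Task 2: sleep for 4 seconds, execute for 1 second

- Task 3: execute for 1 second, enter the critical section, execute for 3 seconds, leave the critical section, execute for 1 second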

Running three threads with different priorities

The following code runs three threads with different priorities.

```c
/*
 * Author: Hiroyuki Chishiro
 * License: 2-Clause BSD
 */
#define _GNU_SOURCE
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sched.h>
#include <time.h>
#include <linux/types.h>
#include <sys/syscall.h>
#include <sys/sysinfo.h>
#include <sys/mman.h>
#include <pthread.h>
#include <math.h>

struct sched_attr {
  __u32 size;
  __u32 sched_policy;
  __u64 sched_flags;

  /* SCHED_NORMAL, SCHED_BATCH */
  __s32 sched_nice;

  /* SCHED_FIFO, SCHED_RR */
  __u32 sched_priority;

  /* SCHED_DEADLINE (nsec) */
  __u64 sched_runtime;
  __u64 sched_deadline;
  __u64 sched_period;

  /* Utilization hints */
  __u32 sched_util_min;
  __u32 sched_util_max;
};

#define SEC2NSEC(sec) ((sec) * 1000 * 1000 * 1000L)

int sched_setattr(pid_t pid, const struct sched_attr *attr, unsigned int flags)
{
  return syscall(__NR_sched_setattr, pid, attr, flags);
}

int sched_getattr(pid_t pid, struct sched_attr *attr,
                  unsigned int size, unsigned int flags)
{
  return syscall(__NR_sched_getattr, pid, attr, size, flags);
}

#define TASK_NUM 3
#define ARGS_NUM 2

pthread_mutex_t shared_mutex;
pthread_mutexattr_t mutex_prioinherit;

/* Pin the thread to one CPU so that all tasks compete for the same core. */
int migrate_thread_to_cpu(pid_t tid, int target_cpu)
{
  cpu_set_t *cpu_set;
  size_t size;
  int nr_cpus;
  int ret;

  if ((nr_cpus = get_nprocs()) == -1) {
    perror("get_nprocs()");
    exit(1);
  }

  if (target_cpu < 0 || target_cpu >= nr_cpus) {
    fprintf(stderr, "Error: target_cpu %d is not in [0,%d).\n", target_cpu, nr_cpus);
    exit(2);
  }

  if ((cpu_set = CPU_ALLOC(nr_cpus)) == NULL) {
    perror("CPU_ALLOC");
    exit(3);
  }

  size = CPU_ALLOC_SIZE(nr_cpus);
  CPU_ZERO_S(size, cpu_set);
  CPU_SET_S(target_cpu, size, cpu_set);

  if ((ret = sched_setaffinity(tid, size, cpu_set)) == -1) {
    perror("sched_setaffinity");
    exit(4);
  }

  CPU_FREE(cpu_set);

  return ret;
}

/* result = end - begin */
void timespec_diff(struct timespec *result,
                   const struct timespec *end,
                   const struct timespec *begin)
{
  if (end->tv_nsec - begin->tv_nsec < 0) {
    result->tv_sec = end->tv_sec - begin->tv_sec - 1;
    result->tv_nsec = end->tv_nsec - begin->tv_nsec + 1000000000;
  } else {
    result->tv_sec = end->tv_sec - begin->tv_sec;
    result->tv_nsec = end->tv_nsec - begin->tv_nsec;
  }
}

/* Busy-loop until this thread has consumed n seconds of CPU time. */
void execute(int n, int id)
{
  struct timespec th_now, th_next, diff;
  int counter = 0;

  printf("Task %d begins execution.\n", id);

  if (clock_gettime(CLOCK_THREAD_CPUTIME_ID, &th_now) != 0) {
    perror("clock_gettime");
    exit(1);
  }

  do {
    counter++;

    if (counter % 1000000 == 0) {
      printf("Task %d is running on cpu %d.\n", id, sched_getcpu());
    }

    if (clock_gettime(CLOCK_THREAD_CPUTIME_ID, &th_next) != 0) {
      perror("clock_gettime");
      exit(2);
    }

    timespec_diff(&diff, &th_next, &th_now);
  } while (SEC2NSEC(diff.tv_sec) + diff.tv_nsec <= SEC2NSEC(n));

  printf("Task %d ends execution.\n", id);
}

void *func(void *args)
{
  struct timespec *tp = (struct timespec *)((void **) args)[0];
  int id = *(int *)((void **) args)[1];
  struct timespec next = {tp->tv_sec, tp->tv_nsec};
  struct sched_attr attr, attr2;
  int ret;
  unsigned int flags = 0;

  attr.size = sizeof(attr);
  attr.sched_policy = SCHED_FIFO;
  attr.sched_flags = 0;
  attr.sched_nice = 0;
  /* a smaller id gets a higher priority */
  attr.sched_priority = sched_get_priority_max(SCHED_FIFO) - id - 1;
  attr.sched_runtime = attr.sched_period = attr.sched_deadline = 0; // not used
  attr.sched_util_min = 0;
  attr.sched_util_max = 0;

  if ((ret = sched_setattr(0, &attr, flags)) < 0) {
    perror("sched_setattr");
    exit(1);
  }

  if ((ret = sched_getattr(0, &attr2, sizeof(struct sched_attr), flags)) < 0) {
    perror("sched_getattr");
    exit(2);
  }

  // sleep until begin time.
  if (clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL) != 0) {
    perror("clock_nanosleep");
    exit(3);
  }

  switch (id) {
  case 1:
    sleep(2);
    execute(1, id);

    if (pthread_mutex_lock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot lock\n");
      exit(4);
    }

    printf("Task %d enters a critical section.\n", id);
    execute(1, id);
    printf("Task %d leaves a critical section.\n", id);

    if (pthread_mutex_unlock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot unlock\n");
      exit(5);
    }

    execute(1, id);
    break;

  case 2:
    sleep(4);
    execute(1, id);
    break;

  case 3:
    execute(1, id);

    if (pthread_mutex_lock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot lock\n");
      exit(6);
    }

    printf("Task %d enters a critical section.\n", id);
    execute(3, id);
    printf("Task %d leaves a critical section.\n", id);

    if (pthread_mutex_unlock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot unlock\n");
      exit(7);
    }

    execute(1, id);
    break;

  default:
    fprintf(stderr, "Error: unknown id: %d\n", id);
    break;
  }

  return NULL;
}

int main(void)
{
  pthread_t threads[TASK_NUM];
  int ids[TASK_NUM];
  struct timespec tp;
  void *args[TASK_NUM][ARGS_NUM];
  int i;
  pid_t tid = gettid();

  // migrate thread to CPU 0.
  migrate_thread_to_cpu(tid, 0);

  if (mlockall(MCL_CURRENT | MCL_FUTURE) == -1) {
    perror("mlockall");
    exit(1);
  }

  if (clock_gettime(CLOCK_MONOTONIC, &tp) != 0) {
    perror("clock_gettime");
    exit(2);
  }

  // begin time is current time + 1.X [s] (at least 1 second waiting from current time).
  tp.tv_sec += 2;
  tp.tv_nsec = 0;

  // default attributes: no priority inheritance is configured here.
  if (pthread_mutexattr_init(&mutex_prioinherit)) {
    perror("pthread_mutexattr_init");
    exit(3);
  }

  if (pthread_mutex_init(&shared_mutex, &mutex_prioinherit)) {
    perror("pthread_mutex_init");
    exit(4);
  }

  for (i = 0; i < TASK_NUM; i++) {
    ids[i] = i + 1;
    args[i][0] = &tp;
    args[i][1] = &ids[i];
    pthread_create(&threads[i], NULL, func, args[i]);
  }

  for (i = 0; i < TASK_NUM; i++) {
    pthread_join(threads[i], NULL);
  }

  if (pthread_mutexattr_destroy(&mutex_prioinherit)) {
    perror("pthread_mutexattr_destroy");
    exit(5);
  }

  if (pthread_mutex_destroy(&shared_mutex)) {
    perror("pthread_mutex_destroy");
    exit(6);
  }

  return 0;
}
```

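The execution result is shown below.

As shown in the figure above, confirm that the tasks run in the order 3 -> 1 -> 3 -> 2 -> 3 -> 1 -> 3!

As described in "[Part 28] Learning Real-Time Systems from a Former University of Tokyo Faculty Member: Resource Access Protocols", priority inversion occurs: while the highest-priority Task 1 waits for the mutex held by Task 3, the medium-priority Task 2 preempts Task 3 and runs first.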

```
$ gcc priority_inversion.c
$ sudo ./a.out
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
Task 3 enters a critical section.
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 2 begins execution.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 ends execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
Task 3 leaves a critical section.
Task 1 enters a critical section.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 1 leaves a critical section.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
```

Running three threads with different priorities under PIP

The following code runs three threads with different priorities under PIP.

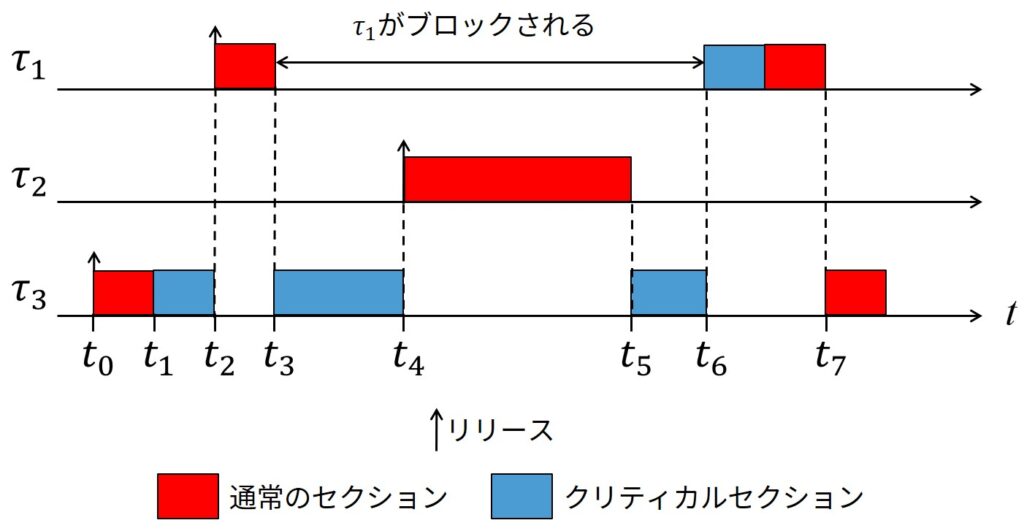

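The difference from the code that causes priority inversion is that the main function calls pthread_mutexattr_setprotocol with PTHREAD_PRIO_INHERIT to enable priority inheritance on the mutex.

In other words, because PIP is a transparent protocol, it can be implemented without changing the task code (the func function).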

```c
/*
 * Author: Hiroyuki Chishiro
 * License: 2-Clause BSD
 */
#define _GNU_SOURCE
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sched.h>
#include <time.h>
#include <linux/types.h>
#include <sys/syscall.h>
#include <sys/sysinfo.h>
#include <sys/mman.h>
#include <pthread.h>
#include <math.h>

struct sched_attr {
  __u32 size;
  __u32 sched_policy;
  __u64 sched_flags;

  /* SCHED_NORMAL, SCHED_BATCH */
  __s32 sched_nice;

  /* SCHED_FIFO, SCHED_RR */
  __u32 sched_priority;

  /* SCHED_DEADLINE (nsec) */
  __u64 sched_runtime;
  __u64 sched_deadline;
  __u64 sched_period;

  /* Utilization hints */
  __u32 sched_util_min;
  __u32 sched_util_max;
};

#define SEC2NSEC(sec) ((sec) * 1000 * 1000 * 1000L)

int sched_setattr(pid_t pid, const struct sched_attr *attr, unsigned int flags)
{
  return syscall(__NR_sched_setattr, pid, attr, flags);
}

int sched_getattr(pid_t pid, struct sched_attr *attr,
                  unsigned int size, unsigned int flags)
{
  return syscall(__NR_sched_getattr, pid, attr, size, flags);
}

#define TASK_NUM 3
#define ARGS_NUM 2

pthread_mutex_t shared_mutex;
pthread_mutexattr_t mutex_prioinherit;

/* Pin the thread to one CPU so that all tasks compete for the same core. */
int migrate_thread_to_cpu(pid_t tid, int target_cpu)
{
  cpu_set_t *cpu_set;
  size_t size;
  int nr_cpus;
  int ret;

  if ((nr_cpus = get_nprocs()) == -1) {
    perror("get_nprocs()");
    exit(1);
  }

  if (target_cpu < 0 || target_cpu >= nr_cpus) {
    fprintf(stderr, "Error: target_cpu %d is not in [0,%d).\n", target_cpu, nr_cpus);
    exit(2);
  }

  if ((cpu_set = CPU_ALLOC(nr_cpus)) == NULL) {
    perror("CPU_ALLOC");
    exit(3);
  }

  size = CPU_ALLOC_SIZE(nr_cpus);
  CPU_ZERO_S(size, cpu_set);
  CPU_SET_S(target_cpu, size, cpu_set);

  if ((ret = sched_setaffinity(tid, size, cpu_set)) == -1) {
    perror("sched_setaffinity");
    exit(4);
  }

  CPU_FREE(cpu_set);

  return ret;
}

/* result = end - begin */
void timespec_diff(struct timespec *result,
                   const struct timespec *end,
                   const struct timespec *begin)
{
  if (end->tv_nsec - begin->tv_nsec < 0) {
    result->tv_sec = end->tv_sec - begin->tv_sec - 1;
    result->tv_nsec = end->tv_nsec - begin->tv_nsec + 1000000000;
  } else {
    result->tv_sec = end->tv_sec - begin->tv_sec;
    result->tv_nsec = end->tv_nsec - begin->tv_nsec;
  }
}

/* Busy-loop until this thread has consumed n seconds of CPU time. */
void execute(int n, int id)
{
  struct timespec th_now, th_next, diff;
  int counter = 0;

  printf("Task %d begins execution.\n", id);

  if (clock_gettime(CLOCK_THREAD_CPUTIME_ID, &th_now) != 0) {
    perror("clock_gettime");
    exit(1);
  }

  do {
    counter++;

    if (counter % 1000000 == 0) {
      printf("Task %d is running on cpu %d.\n", id, sched_getcpu());
    }

    if (clock_gettime(CLOCK_THREAD_CPUTIME_ID, &th_next) != 0) {
      perror("clock_gettime");
      exit(2);
    }

    timespec_diff(&diff, &th_next, &th_now);
  } while (SEC2NSEC(diff.tv_sec) + diff.tv_nsec <= SEC2NSEC(n));

  printf("Task %d ends execution.\n", id);
}

void *func(void *args)
{
  struct timespec *tp = (struct timespec *)((void **) args)[0];
  int id = *(int *)((void **) args)[1];
  struct timespec next = {tp->tv_sec, tp->tv_nsec};
  struct sched_attr attr, attr2;
  int ret;
  unsigned int flags = 0;

  attr.size = sizeof(attr);
  attr.sched_policy = SCHED_FIFO;
  attr.sched_flags = 0;
  attr.sched_nice = 0;
  /* a smaller id gets a higher priority */
  attr.sched_priority = sched_get_priority_max(SCHED_FIFO) - id - 1;
  attr.sched_runtime = attr.sched_period = attr.sched_deadline = 0; // not used
  attr.sched_util_min = 0;
  attr.sched_util_max = 0;

  if ((ret = sched_setattr(0, &attr, flags)) < 0) {
    perror("sched_setattr");
    exit(1);
  }

  if ((ret = sched_getattr(0, &attr2, sizeof(struct sched_attr), flags)) < 0) {
    perror("sched_getattr");
    exit(2);
  }

  // sleep until begin time.
  if (clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL) != 0) {
    perror("clock_nanosleep");
    exit(3);
  }

  switch (id) {
  case 1:
    sleep(2);
    execute(1, id);

    if (pthread_mutex_lock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot lock\n");
      exit(4);
    }

    printf("Task %d enters a critical section.\n", id);
    execute(1, id);
    printf("Task %d leaves a critical section.\n", id);

    if (pthread_mutex_unlock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot unlock\n");
      exit(5);
    }

    execute(1, id);
    break;

  case 2:
    sleep(4);
    execute(1, id);
    break;

  case 3:
    execute(1, id);

    if (pthread_mutex_lock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot lock\n");
      exit(6);
    }

    printf("Task %d enters a critical section.\n", id);
    execute(3, id);
    printf("Task %d leaves a critical section.\n", id);

    if (pthread_mutex_unlock(&shared_mutex) != 0) {
      fprintf(stderr, "Error: cannot unlock\n");
      exit(7);
    }

    execute(1, id);
    break;

  default:
    fprintf(stderr, "Error: unknown id: %d\n", id);
    break;
  }

  return NULL;
}

int main(void)
{
  pthread_t threads[TASK_NUM];
  int ids[TASK_NUM];
  struct timespec tp;
  void *args[TASK_NUM][ARGS_NUM];
  int i;
  pid_t tid = gettid();

  // migrate thread to CPU 0.
  migrate_thread_to_cpu(tid, 0);

  if (mlockall(MCL_CURRENT | MCL_FUTURE) == -1) {
    perror("mlockall");
    exit(1);
  }

  if (clock_gettime(CLOCK_MONOTONIC, &tp) != 0) {
    perror("clock_gettime");
    exit(2);
  }

  // begin time is current time + 1.X [s] (at least 1 second waiting from current time).
  tp.tv_sec += 2;
  tp.tv_nsec = 0;

  if (pthread_mutexattr_init(&mutex_prioinherit)) {
    perror("pthread_mutexattr_init");
    exit(3);
  }

  // enable priority inheritance (PIP) for mutexes created with this attribute.
  if (pthread_mutexattr_setprotocol(&mutex_prioinherit, PTHREAD_PRIO_INHERIT)) {
    perror("pthread_mutexattr_setprotocol");
    exit(4);
  }

  if (pthread_mutex_init(&shared_mutex, &mutex_prioinherit)) {
    perror("pthread_mutex_init");
    exit(5);
  }

  for (i = 0; i < TASK_NUM; i++) {
    ids[i] = i + 1;
    args[i][0] = &tp;
    args[i][1] = &ids[i];
    pthread_create(&threads[i], NULL, func, args[i]);
  }

  for (i = 0; i < TASK_NUM; i++) {
    pthread_join(threads[i], NULL);
  }

  if (pthread_mutexattr_destroy(&mutex_prioinherit)) {
    perror("pthread_mutexattr_destroy");
    exit(6);
  }

  if (pthread_mutex_destroy(&shared_mutex)) {
    perror("pthread_mutex_destroy");
    exit(7);
  }

  return 0;
}
```

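The execution result is shown below.

As shown in the figure above, confirm that the tasks run in the order 3 -> 1 -> 3 -> 1 -> 2 -> 3!

As described in "[Part 30] Learning Real-Time Systems from a Former University of Tokyo Faculty Member: Priority Inheritance Protocol", PIP resolves the priority inversion: while Task 1 waits for the mutex, Task 3 inherits Task 1's priority, so Task 2 can no longer preempt it inside the critical section.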

```
$ gcc priority_inheritance.c
$ sudo ./a.out
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
Task 3 enters a critical section.
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
Task 3 leaves a critical section.
Task 1 enters a critical section.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 1 leaves a critical section.
Task 1 begins execution.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 is running on cpu 0.
Task 1 ends execution.
Task 2 begins execution.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 is running on cpu 0.
Task 2 ends execution.
Task 3 begins execution.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 is running on cpu 0.
Task 3 ends execution.
```

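Summary

This article showed how to implement the resource access protocol PIP on Linux in C.

Specifically, it walked through code in which priority inversion occurs and code that resolves it with PIP.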
